Reporting via Event-driven Data Warehouse Integration

Please note:Ironclad now uses the OAuth 2.0 Authorization Framework to authenticate API requests. As of November 22nd, 2024, our previous bearer token method of authentication has been deprecated. We are currently in the process of updating this guide with details on how to implement with OAuth 2.0. In the meantime, please refer to our article Migrating to OAuth 2.0.

Overview

This guide walks through the steps of building a real-time event-driven data warehouse solution for Ironclad data and how you can go about visualizing data.

By following this guide, you should be able to answer questions like the following:

- How long are our contract workflows taking (on average / per workflow / etc) from launch to completion?

- How many of our contracts are using a specific field value?

- How many workflows (overall / of type X) have we launched over the past month/quarter/year?

Considerations

The reporting solution presented here involves a custom integration with Ironclad to a data warehouse (BigQuery, in this example). You may want to consider solutions that exist on the market today, which can lead to a faster and more easily managed implementation. For example, some data integration solutions (e.g., Fivetran) may provide pre-built connectors for your data environment that can greatly reduce the risk of error, maintenance, and resources needed for creating your own custom integration. If alternate solutions aren’t the right fit for your current needs, then this guide should help cover some general concepts for building your own solution or applying it to something that may already be built but requires specific interactions with Ironclad webhooks or public API endpoints.

Requirements

- Familiarity/knowledge of: JavaScript, Node.js, Google Cloud Functions, Google BigQuery, and Tableau

- Ironclad environment: You will need an Ironclad production or demo environment with the public API enabled. Additionally, you’ll need to be able to generate data in this environment to test your data warehouse integration.

- API Tokens: In order to use the Ironclad public API, you will need to generate an API token for your Ironclad environment. Tokens can be generated by logging into Ironclad and navigating to our Name] → → Company Settings → API.

Solution Overview

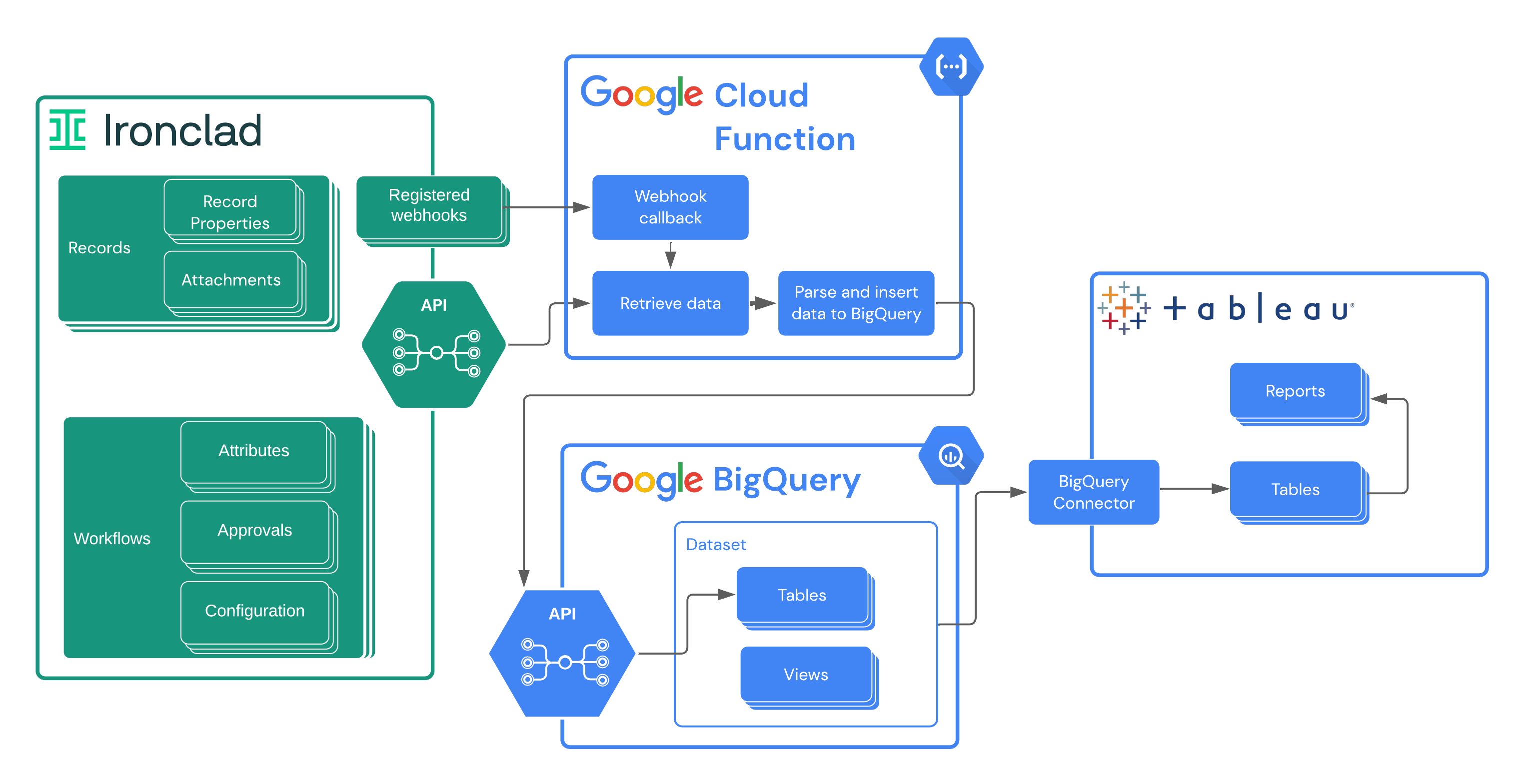

This solution guide will utilize Ironclad webhooks to trigger calls to the Ironclad public API in order to pull data and insert into the relevant BigQuery tables.

This solution guide uses the following technologies:

- A Node.js script running in a server environment (e.g., Google Cloud Run/App Engine/etc.) to facilitate retrieving and pushing of data. Our example will deploy Node.js code as a Google Cloud Function.

- Google BigQuery as the data warehouse storage solution

- Tableau reports/dashboards for visualization

The figure below shows the interaction of these components with your Ironclad environment.

In describing this solution, we're going to progress through these components in the following order:

- We will start with a description of the setup of the BigQuery data warehouse, as the heart of this overall solution.

- We will then describe the custom Node.js code that will be used to implement the webhook callbacks and API calls needed to capture Ironclad data and populate the BigQuery data

- With the webhook callbacks described, we'll then discuss the configuration of these webhook callbacks in Ironclad.

- Finally, we'll show how the BigQuery data can be visualized in different ways in a Tableau dashboard.

BigQuery Set Up

Requirements

You will need the following in order to proceed with configuring BigQuery for this solution:

- A Google service account with the appropriate permissions to interact with BigQuery

- You must enable the "BigQuery API" within your Google Cloud account - this can be done from the Google Cloud Platform console.

Data Model Overview

This guide will make use of a data model (in the form of a BigQuery dataset) containing tables used for receiving and parsing Ironclad workflow event data. Most of the tables in this data model align 1:1 with the contract workflow events generated by Ironclad. There are also a few "reference" tables that are used to store additional details for specific event types, in order to support metrics across contract types and executed contract records.

Event Data

Workflows Launched

This object will contain data about the workflow that was launched, which can be helpful if we need to retrieve data of the original state.

Workflows Approvals

This object will hold metadata on approval status changes that occur on any workflow where approvals are triggered. For example, storing this data can allow us to get insight into the performance of workflows that have approvals versus workflows that do not.

Workflows Completed

This object will contain data about the workflow that was completed.

Workflows Cancelled

This object will contain data about the workflow that was cancelled.

Workflows Updated

This object will contain data about a workflow that was updated.

Reference Data

Workflows Launch Configuration

This object will hold metadata about the workflow template used to launch the workflow. For example, we’ll want to store the title of the template used for every launched workflow in the case we want to find patterns of a specific template or between other workflow templates used.

Records

This object will contain data with records created from the completed workflows.

1. Create Dataset

You’ll need a dataset created in BigQuery to house the tables. You can do this from either Cloud Console, BigQuery command-line tools, or with the BigQuery Node SDK.

2. Create Tables

For this guide, we’ll be creating 6 tables in our dataset. Each table will hold specific information relating to the webhook events and be structured around the above-mentioned models in the previous “Data Model Overview” section.

Workflows Launch Configuration Table

Table name: workflows_launch_configuration

Schema

| Field | Type | Description |

|---|---|---|

| workflowId | STRING | The Ironclad unique ID for the workflow launched |

| templateId | STRING | The Ironclad unique ID for the template used for the workflow |

| templateName | STRING | The name of the Ironclad template used for the workflow |

| templateSchema | STRING | The schema (in JSON format) of the template used for the workflow |

| webhookEventTime | TIMESTAMP | The time when the workflow was launched |

Workflows Approvals Table

Table name: workflows_approvals

Schema

| Field | Type | Description |

|---|---|---|

| workflowId | STRING | The Ironclad unique ID for the workflow that was approved |

| event | STRING | The name of the webhook event |

| status | STRING | The status of the workflow after this approval event |

| userId | STRING | The Ironclad ID of the user that approved the workflow |

| userEmail | STRING | The email of the user that approved the workflow |

| approvalName | STRING | The name of the approval group for this approval |

| approvalId | STRING | The Ironclad unique ID for this approval |

| webhookEventTime | TIMESTAMP | The time when the workflow was approved |

Workflows Signer Table

Table name: workflows_signer

Schema

| Field | Type | Description |

|---|---|---|

| workflowId | STRING | The Ironclad unique ID for the workflow that was approved |

| title | STRING | The title of the workflow |

| signer | STRING | The status of the signature request for the signer |

| webhookEventTime | TIMESTAMP | The time when the workflow was approved |

Workflows Launched, Workflows Completed, Workflows Cancelled, and Workflows Updated Tables

Tables names: workflows_launched, workflows_completed, workflows_cancelled, workflows_updated

All of these tables will use the same table definition, shown below.

Schema

| Field | Type | Description |

|---|---|---|

| id | STRING | The Ironclad unique ID for the workflow associated with the event |

| title | STRING | The title of the workflow associated with this event |

| template | STRING | The Ironclad unique ID for the template used for the workflow |

| step | STRING | The stage of the workflow after completion of this event |

| status | STRING | The status of the workflow after completion of this event |

| created | DATETIME | The time of creation of the associated workflow |

| lastUpdated | DATETIME | The time of last update to the associated workflow |

| agreementDate | DATETIME | The value of the agreementDate field of the workflow after completion of this event |

| attributes | STRING | A JSON string containing the overall set of attributes and values for the workflow after completion of this event |

| webhookEventTime | TIMESTAMP | The time this event fired |

Records Table

Table name: records

Schema

| Field | Type | Description |

|---|---|---|

| id | STRING | The Ironclad unique ID for the workflow that generated this record |

| type | STRING | The type of this record |

| name | STRING | The name of the record entry |

| lastUpdated | DATETIME | The time of last update to this record |

| agreementDate | DATETIME | The value of the agreementDate field in this record |

| properties | STRING | A JSON string containing the attributes and value for the record |

| workflowId | STRING | The Ironclad unique ID for the workflow that generated this record |

| webhookEventTime | TIMESTAMP | The time of the workflow completed event that created this record |

3. Create Views

In addition to the tables described above, we will also create views that will be used to parse and clean data before being used by Tableau. Specifically, we will be using these views to:

- Extract specific field values from the JSON data we are storing in specific table fields

- Remove any unnecessary data not needed for visualization

- Do any necessary joins between tables

Completed Workflows View

This view is used for querying data stored about completed workflows for visualization within Tableau. It joins specific fields from the workflows_completed and workflow_launch_configuration tables, along with data extracted from the workflow attributes stored in JSON in the workflow_completed.attributes field.

Example Query

SELECT

WC.id,

WC.title,

WC.template,

WC.step,

WC.status,

WC.created,

WC.lastUpdated,

WC.agreementDate,

WC.webhookEventTime,

JSON_VALUE(WC.attributes, '$.contractExecutionDate') as contractExecutionDate,

JSON_VALUE(WC.attributes, '$.paperSource') as paperSource,

WLC.templateName

FROM YOUR_BIGQUERY_PROJECT_ID.YOUR_DATASET_ID.workflows_completed WC

JOIN YOUR_BIGQUERY_PROJECT_ID.YOUR_DATASET_ID.workflows_launch_configuration WLC

ON WC.id = WLC.workflowIdRecords View

This view is used for querying data stored about records for visualization within Tableau. It merges specific fields from the records table with data extracted from the JSON data stored in the record.properties field.

Example Query

SELECT

id,

type,

name,

lastUpdated,

agreementDate,

workflowId,

webhookEventTime,

JSON_VALUE(properties, '$.department.value') as department,

JSON_VALUE(properties, '$.counterpartyName.value') as counterpartyName

FROM YOUR_BIGQUERY_PROJECT_ID.YOUR_DATASET_ID.recordsWorkflow Approvals View

This view is used for querying data stored about approvals for visualization within Tableau. It joins specific fields from the workflow_approvals and workflow_launch_configuration tables.

Example Query

SELECT

WA.workflowId,

WA.event,

WA.status,

WA.userId,

WA.userEmail,

WA.approvalName,

WA.approvalId,

WA.fullApprovalData,

WA.webhookEventTime,

WLC.templateName

FROM YOUR_BIGQUERY_PROJECT_ID.YOUR_DATASET_ID.workflows_approvals WA

LEFT JOIN YOUR_BIGQUERY_PROJECT_ID.YOUR_DATASET_ID.workflows_launch_configuration WLC

ON WA.workflowId = WLC.workflowIdWorkflow Signers View

This view is used for querying data stored about signers.

Example Query

SELECT

workflowId,

title,

JSON_VALUE(signer, '$.signer.roleName') as roleName,

JSON_VALUE(signer, '$.signer.name') as name,

JSON_VALUE(signer, '$.signer.email') as email,

JSON_VALUE(signer, '$.signer.signatureStatus.status') as signerStatus,

webhookEventTime

FROM YOUR_BIGQUERY_PROJECT_ID.YOUR_DATASET_ID.workflows_signerGoogle Cloud Function Set Up

Node.js Code Dependencies

The Google Cloud Function we use to populate the data warehouse is written in Node.js. The Node.js code uses the following Node packages, available through npm/yarn.

- @google-cloud/bigquery

- @google-cloud/functions-framework (for testing locally)

- axios

- dotenv

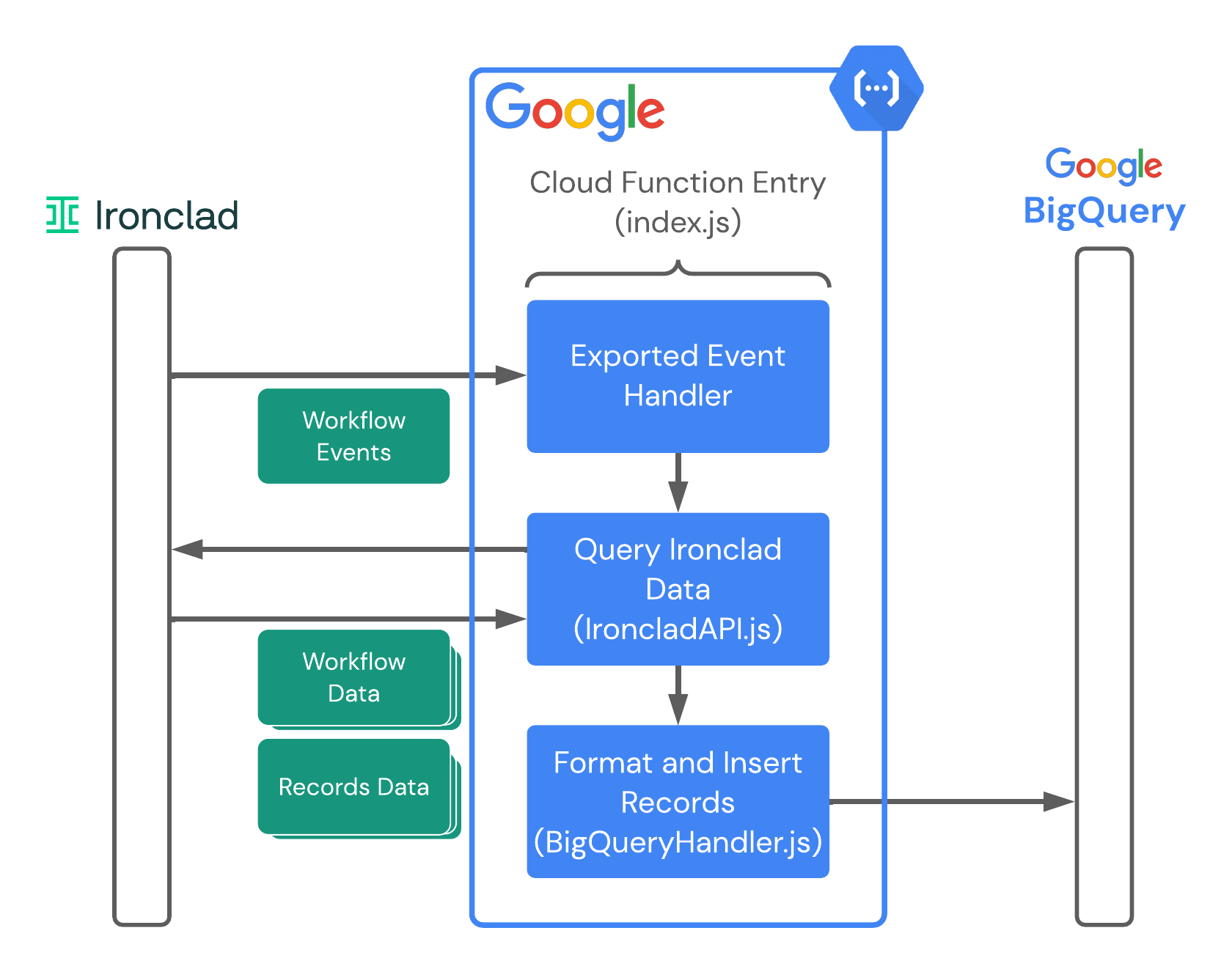

Project Overview Diagram

The figure below shows how the major components of the Node.js code work together to pull data from Ironclad to populate the BigQuery data tables.

In the remainder of this section, we'll show the code components that make up the overall Node.js process that is deployed to Google Cloud.

Helpers

helpers.js

General utilities are included here that need to be used for checking/formatting data before being sent to BigQuery.

// Used to standardize date formatting in some edge cases.

const formatDate = (dateString) => {

const date = new Date(dateString);

return date.toISOString().slice(0, -1);

};

// Checks and returns key value or returns null if none found.

const keyObjCheck = (key, obj) => {

if (key in obj) return obj[key];

return null;

};

// BigQuery requires data to be sent as Newline Delimited JSON.

// This flattens an object for BigQuery to accept the data.

const formatObjectToColumn = (obj) => {

try {

const jsonString = JSON.stringify(obj);

return jsonString.replace(/\n/g, '');

} catch (error) {

console.error(error);

return null;

}

};

// Additional check and formatting for dates.

const checkAndFormatDateIfExists = (key, obj) => {

try {

if (key in obj) return formatDate(obj[key]);

else return null;

} catch (error) {

console.error(error);

return null;

}

};

module.exports = {

formatDate,

keyObjCheck,

formatObjectToColumn,

checkAndFormatDateIfExists,

};Models

Workflow.js

The Workflow class is used to parse and format data around the workflow_launched, workflow_completed, workflow_updated, and workflow_cancelled webhook events.

const {

formatObjectToColumn,

checkAndFormatDateIfExists,

} = require('../helpers');

class Workflow {

constructor(

workflowData,

timestamp,

) {

this.id = workflowData.id;

this.title = workflowData.title;

this.template = workflowData.template;

this.step = workflowData.step;

this.status = workflowData.status;

this.created = checkAndFormatDateIfExists(

'created',

workflowData,

);

this.lastUpdated = checkAndFormatDateIfExists(

'lastUpdated',

workflowData,

);

this.agreementDate = checkAndFormatDateIfExists(

'agreementDate',

workflowData.attributes,

);

this.attributes = formatObjectToColumn(workflowData.attributes);

this.webhookEventTime = timestamp;

}

}

module.exports = { Workflow };WorkflowApproval.js

This class is used to parse webhook data from the workflow_approval_status_changed event.

const { keyObjCheck, formatObjectToColumn } = require('../helpers');

class WorkflowApproval {

constructor(webhookData, timestamp, fullApprovalData) {

this.workflowId = webhookData.workflowID;

this.event = webhookData.event;

this.status = webhookData.status;

this.userId = keyObjCheck('userID', webhookData);

this.userEmail = keyObjCheck('userEmail', webhookData);

this.approvalName = webhookData.approvalName;

this.approvalId = webhookData.approvalID;

this.fullApprovalData = formatObjectToColumn(fullApprovalData);

this.webhookEventTime = timestamp;

}

}

module.exports = { WorkflowApproval };WorkflowSigner.js

This class is used to parse webhook data from the workflow_updated event when the workflow step is Sign.

const {formatObjectToColumn,} = require('../helpers');

class WorkflowSigner {

constructor(workflowId, title, signer, timestamp,) {

this.workflowId = workflowId;

this.title = title;

this.signer = formatObjectToColumn(signer);

this.webhookEventTime = timestamp;

}

}

module.exports = { WorkflowSigner };WorkflowConfigSnapshot.js

This class is used to parse data around the workflow_launched webhook event.

const { formatObjectToColumn } = require('../helpers');

class WorkflowConfigSnapshot {

constructor(

templateId,

workflowId,

configData,

timestamp,

) {

this.workflowId = workflowId;

this.templateId = templateId;

this.templateName = configData.name;

this.templateSchema = formatObjectToColumn(configData.schema);

this.webhookEventTime = timestamp;

}

}

module.exports = { WorkflowConfigSnapshot };Record.js

This class is used to parse and format data around the workflow_launched webhook event.

const {

formatObjectToColumn,

checkAndFormatDateIfExists,

} = require('../helpers');

class Record {

constructor(

recordData,

timestamp,

) {

this.id = recordData.id;

this.type = recordData.type;

this.name = recordData.name;

this.lastUpdated = checkAndFormatDateIfExists('lastUpdated', recordData);

this.agreementDate = ('agreementDate' in recordData.properties) ?

checkAndFormatDateIfExists('value', recordData.properties.agreementDate) :

null;

this.properties = formatObjectToColumn(recordData.properties);

this.workflowId = recordData.source.workflowId;

this.webhookEventTime = timestamp;

}

}

module.exports = { Record };Ironclad API

IroncladAPI.js

This class is used for directly interacting with the Ironclad Public API.

const axios = require('axios').default;

const API_KEY = process.env.IRONCLAD_API_KEY;

class IroncladAPI {

constructor(apiEnvironment = 'demo') {

this.apiVersion = 'v1';

this.apiEnvironment = apiEnvironment;

this.requestConfig = { headers: { 'Authorization': `Bearer ${API_KEY}`} };

}

async retrieveWorkflowData(workflowID) {

const response = await axios.get(

`${this.apiUrl()}/workflows/${workflowID}`,

this.requestConfig,

);

return response.data;

}

async retrieveWorkflowApprovers(workflowID) {

const response = await axios.get(

`${this.apiUrl()}/workflows/${workflowID}/approvals`,

this.requestConfig,

);

return response.data;

}

async retrieveWorkflowSigners(workflowID) {

const response = await axios.get(

`${this.apiUrl()}/workflows/${workflowID}/signatures`,

this.requestConfig,

);

return response.data;

}

async retrieveRecordData(recordID) {

const response = await axios.get(

`${this.apiUrl()}/records/${recordID}`,

this.requestConfig,

);

return response.data;

}

async retrieveWorkflowConfig(templateId) {

const response = await axios.get(

`${this.apiUrl()}/workflow-schemas/${templateId}?form=launch`,

this.requestConfig,

);

return response.data;

}

apiUrl() {

switch (this.apiEnvironment) {

case 'demo':

return `https://demo.ironcladapp.com/public/api/${this.apiVersion}`;

case 'production':

return `https://na1.ironcladapp.com/public/api/${this.apiVersion}`;

default:

return `https://na1.ironcladapp.com/public/api/${this.apiVersion}`;

}

}

}

module.exports = { IroncladAPI };BigQuery

BigQueryStatics.js

This class is used to store details needed for using and connecting to BigQuery.

require('dotenv').config();

const BIGQUERY_DATASET_ID = process.env.BIGQUERY_DATASET_ID;

class BigQueryStatics {

// Returns the ID of the BigQuery Dataset, which is specified

// in the .env file of thie example.

static get datasetId() {

return BIGQUERY_DATASET_ID;

}

// Method used for conveniently retrieving the identifier or schema of a BigQuery

// table based on the specified table name.

static get tables() {

return {

workflowsLaunchConfiguration: this.workflowsLaunchConfigurationTable,

workflowsLaunched: this.workflowsLaunchedTable,

workflowsApprovals: this.workflowsApprovalsTable,

workflowsSigner: this.workflowsSignerTable,

workflowsCompleted: this.workflowsCompletedTable,

workflowsCancelled: this.workflowsCancelledTable,

workflowsUpdated: this.workflowsUpdatedTable,

records: this.recordsTable,

};

}

// The query used when creating the "Completed Workflows View".

static completedWorkflowsViewQuery(

projectIdentifier,

datasetIdentifier,

sourceTableIdentifier,

) {

return `

SELECT

WC.id,

WC.title,

WC.template,

WC.step,

WC.status,

WC.created,

WC.lastUpdated,

WC.agreementDate,

WC.webhookEventTime,

WLC.templateName

FROM ${projectIdentifier}.${datasetIdentifier}.${sourceTableIdentifier} WC

LEFT JOIN ${projectIdentifier}.${datasetIdentifier}.${sourceTableIdentifier} WLC

ON WC.template = WLC.templateId

`;

}

// The query used when creating the "Records View".

static recordsViewQuery(

projectIdentifier,

datasetIdentifier,

sourceTableIdentifier,

) {

return `

SELECT

id,

type,

name,

lastUpdated,

agreementDate,

workflowId,

webhookEventTime,

JSON_VALUE(properties, '$.department.value') as department,

JSON_VALUE(properties, '$.counterpartyName.value') as counterpartyName

FROM ${projectIdentifier}.${datasetIdentifier}.${sourceTableIdentifier}

`;

}

static workflowsApprovalsQuery(

projectIdentifier,

datasetIdentifier,

sourceTableIdentifier,

) {

return `

SELECT

WA.workflowId,

WA.event,

WA.status,

WA.userId,

WA.userEmail,

WA.approvalName,

WA.approvalId,

WA.fullApprovalData,

WA.webhookEventTime,

WLC.templateName

FROM ${projectIdentifier}.${datasetIdentifier}.${sourceTableIdentifier} WA

LEFT JOIN ${projectIdentifier}.${datasetIdentifier}.workflows_launch_configuration WLC

ON WA.workflowId = WLC.workflowId

`;

}

static workflowSignerQuery(

projectIdentifier,

datasetIdentifier,

sourceTableIdentifier,

) {

return `

SELECT

workflowId,

title,

JSON_VALUE(signer, '$.signer.roleName') as roleName,

JSON_VALUE(signer, '$.signer.name') as name,

JSON_VALUE(signer, '$.signer.email') as email,

JSON_VALUE(signer, '$.signer.signatureStatus.status') as signerStatus,

webhookEventTime

FROM ${projectIdentifier}.${datasetIdentifier}.${sourceTableIdentifier}

`;

}

// The schema used for workflows_launched, workflows_completed, workflows_cancelled,

// and workflows_updated tables.

static _workflowTableSchema() {

return [

{ name: 'id', type: 'STRING' },

{ name: 'title', type: 'STRING' },

{ name: 'template', type: 'STRING' },

{ name: 'step', type: 'STRING' },

{ name: 'status', type: 'STRING' },

{ name: 'created', type: 'DATETIME' },

{ name: 'lastUpdated', type: 'DATETIME' },

{ name: 'agreementDate', type: 'DATETIME' },

{ name: 'attributes', type: 'STRING' },

{ name: 'webhookEventTime', type: 'TIMESTAMP' },

];

}

// The schema used for the workflows_approvals table.

static _workflowApprovalsTableSchema() {

return [

{ name: 'workflowId', type: 'STRING' },

{ name: 'event', type: 'STRING' },

{ name: 'status', type: 'STRING' },

{ name: 'userId', type: 'STRING' },

{ name: 'userEmail', type: 'STRING' },

{ name: 'approvalName', type: 'STRING' },

{ name: 'approvalId', type: 'STRING' },

{ name: 'fullApprovalData', type: 'STRING' },

{ name: 'webhookEventTime', type: 'TIMESTAMP' },

];

}

// The schema used for the records table.

static _recordsTableSchema() {

return [

{ name: 'id', type: 'STRING' },

{ name: 'type', type: 'STRING' },

{ name: 'name', type: 'STRING' },

{ name: 'lastUpdated', type: 'DATETIME' },

{ name: 'agreementDate', type: 'DATETIME' },

{ name: 'properties', type: 'STRING' },

{ name: 'workflowId', type: 'STRING' },

{ name: 'webhookEventTime', type: 'TIMESTAMP' },

];

}

// The schema used the workflows_launch_configuration table.

static _workflowsLaunchConfigurationSchema() {

return [

{ name: 'workflowId', type: 'STRING' },

{ name: 'templateId', type: 'STRING' },

{ name: 'templateName', type: 'STRING' },

{ name: 'templateSchema', type: 'STRING' },

{ name: 'webhookEventTime', type: 'TIMESTAMP' },

];

}

static _workflowsSignerSchema() {

return [

{ name: 'workflowId', type: 'STRING' },

{ name: 'title', type: 'STRING' },

{ name: 'signer', type: 'STRING' },

{ name: 'webhookEventTime', type: 'TIMESTAMP' },

];

}

// The identifier and schema of the workflows_launched table.

static get workflowsLaunchedTable() {

return Object.freeze({

tableIdentifier: 'workflows_launched',

schema: this._workflowTableSchema(),

});

}

// The identifier and schema of the workflows_launch_configuration table.

static get workflowsLaunchConfigurationTable() {

return Object.freeze({

tableIdentifier: 'workflows_launch_configuration',

schema: this._workflowsLaunchConfigurationSchema(),

});

}

// The identifier and schema of the workflows_approvals table.

static get workflowsApprovalsTable() {

return Object.freeze({

tableIdentifier: 'workflows_approvals',

schema: this._workflowApprovalsTableSchema(),

});

}

// The identifier and schema of the workflows_signer table.

static get workflowsSignerTable() {

return Object.freeze({

tableIdentifier: 'workflows_signer',

schema: this._workflowsSignerSchema(),

});

}

// The identifier and schema of the workflows_completed table.

static get workflowsCompletedTable() {

return Object.freeze({

tableIdentifier: 'workflows_completed',

schema: this._workflowTableSchema(),

});

}

// The identifier and schema of the workflows_cancelled table.

static get workflowsCancelledTable() {

return Object.freeze({

tableIdentifier: 'workflows_cancelled',

schema: this._workflowTableSchema(),

});

}

// The identifier and schema of the workflows_updated table.

static get workflowsUpdatedTable() {

return Object.freeze({

tableIdentifier: 'workflows_updated',

schema: this._workflowTableSchema(),

});

}

// The identifier and schema of the records table.

static get recordsTable() {

return Object.freeze({

tableIdentifier: 'records',

schema: this._recordsTableSchema(),

});

}

}

module.exports = { BigQueryStatics };bigQueryHandler.js

The insertStreamingData function is used to insert prepared data to the specified BigQuery dataset and table.

const { BigQuery } = require('@google-cloud/bigquery');

const BIGQUERY_PROJECT_ID = process.env.BIGQUERY_PROJECT_ID;

// Options used for the BigQuery Node.js SDK.

const options = {

keyFilename: `${__dirname}/service-account-file.json`,

projectId: BIGQUERY_PROJECT_ID,

};

// Used to prepared data into a specified dataset ID and table ID.

const insertStreamingData = async ({

datasetId = '',

tableId = '',

dataRows = [],

}) => {

if (datasetId == '' || tableId == '') {

throw new Error('Dataset ID and Table ID are required.');

}

const bigquery = new BigQuery(options);

return await bigquery

.dataset(datasetId)

.table(tableId)

.insert(dataRows);

};

module.exports = { insertStreamingData };Main Components

WebhookHandler.js

The WebhookHandler class is used to handle the processing of webhook events by using the relevant method (e.g., workflowLaunched for a workflow_launched webhook event type).

const { BigQuery } = require('@google-cloud/bigquery');

const { IroncladAPI } = require('./IroncladAPI');

const {

Workflow,

Record,

WorkflowApproval,

WorkflowConfigSnapshot,

} = require('./models');

const {

insertStreamingData,

BigQueryStatics,

} = require('./BigQuery');

class WebhookHandler {

constructor(requestBody) {

this.requestBody = requestBody;

this.timestamp = BigQuery.timestamp(new Date());

this.icApi = new IroncladAPI();

}

// Used for preparing and storing data into both the workflows_launched

// and workflows_launch_configuration BigQuery tables.

async workflowLaunched() {

const { templateID, workflowID } = this.requestBody.payload;

await this._handleWorkflowLaunchConfig(templateID, workflowID);

return await this._handleWorkflowLaunched(workflowID);

}

// Used for preparing and storing data into both the workflows_completed and

// records BigQuery tables.

async workflowCompleted() {

const { workflowID, recordIDs } = this.requestBody.payload;

const workflowData = await this.icApi.retrieveWorkflowData(workflowID);

const workflowRowData = [new Workflow(workflowData, this.timestamp)];

await this._insertDataToBigQuery(

workflowRowData,

BigQueryStatics.tables.workflowsCompleted.tableIdentifier,

);

return await this._processRecordsData(recordIDs);

}

// Used for preparing and storing data into the workflows_cancelled

// BigQuery table.

async workflowCancelled() {

const { workflowID } = this.requestBody.payload;

const workflowData = await this.icApi.retrieveWorkflowData(workflowID);

const workflowRowData = [new Workflow(workflowData, this.timestamp)];

return await this._insertDataToBigQuery(

workflowRowData,

BigQueryStatics.tables.workflowsCancelled.tableIdentifier,

);

}

// Used for preparing and storing data into the workflows_updated

// BigQuery table.

async workflowUpdated() {

const { workflowID } = this.requestBody.payload;

const workflowData = await this.icApi.retrieveWorkflowData(workflowID);

const workflowRowData = [new Workflow(workflowData, this.timestamp)];

const bigQueryUpdated = await this._insertDataToBigQuery(

workflowRowData,

BigQueryStatics.tables.workflowsUpdated.tableIdentifier,

);

if (workflowData.step === 'Sign') {

await this.workflowSigners();

}

return bigQueryUpdated;

}

/**

* Handles and inserts signers data into BigQuery from the

* workflow_updated webhook event type.

* @return {Promise} The response from BigQuery insert.

*/

async workflowSigners() {

const { workflowID } = this.requestBody.payload;

const workflowSignersData = await this.icApi.retrieveWorkflowSigners(

workflowID,

);

const workflowSignersRowData = [];

for (const signerData of workflowSignersData.signers) {

workflowSignersRowData.push(

new WorkflowSigner(

workflowSignersData.workflowId,

workflowSignersData.title,

signerData,

this.timestamp),

);

}

await this._insertDataToBigQuery(

workflowSignersRowData,

BigQueryStatics.tables.workflowsSigner.tableIdentifier,

);

}

// Used for preparing and storing data into the workflow workflow_approval_status_changed

// BigQuery table.

async workflowApprovalStatusChange() {

const fullApprovalData = await this.icApi.retrieveWorkflowApprovers(this.requestBody.payload.workflowID);

const rowData = [new WorkflowApproval(

this.requestBody.payload,

this.timestamp,

fullApprovalData,

)];

return await this._insertDataToBigQuery(

rowData,

BigQueryStatics.tables.workflowsApprovals.tableIdentifier,

);

}

// Used for preparing and storing data into the workflow_launch_configuration

// BigQuery table.

async _handleWorkflowLaunchConfig(templateId, workflowId) {

const configData = await this.icApi.retrieveWorkflowConfig(

templateId,

);

const rowData = [new WorkflowConfigSnapshot(

templateId,

workflowId,

configData,

this.timestamp,

)];

return await this._insertDataToBigQuery(

rowData,

BigQueryStatics.tables.workflowsLaunchConfiguration.tableIdentifier,

);

}

// Used for preparing and storing data into the workflows_launched

// BigQuery table.

async _handleWorkflowLaunched(workflowId) {

const workflowData = await this.icApi.retrieveWorkflowData(workflowId);

const workflowRowData = [new Workflow(workflowData, this.timestamp)];

return await this._insertDataToBigQuery(

workflowRowData,

BigQueryStatics.tables.workflowsLaunched.tableIdentifier,

);

}

// Used to handle processing and storing data into the records

// BigQuery table.

async _processRecordsData(recordIds = []) {

if (recordIds.length === 0) return;

const recordsToGet = recordIds.map(

(recordId) => this.icApi.retrieveRecordData(recordId),

);

const recordsData = await Promise.all(recordsToGet);

const processedRecords = recordsData.map(

(recordData) => new Record(recordData, this.timestamp),

);

return await this._insertDataToBigQuery(

processedRecords,

BigQueryStatics.tables.records.tableIdentifier,

);

}

// Used to call the insertStreamingData method for inserting

// data into BigQuery.

async _insertDataToBigQuery(rowData, tableIdentifier) {

return await insertStreamingData({

datasetId: BigQueryStatics.datasetId,

tableId: tableIdentifier,

dataRows: rowData,

});

}

}

module.exports = { WebhookHandler };index.js

This file is the entry point for the function and handles processing the integration.

require('dotenv').config();

const { WebhookHandler } = require('./src/WebhookHandler');

/**

* HTTP Cloud Function.

*

* @param {Object} req Cloud Function request context.

* @param {Object} res Cloud Function response context.

*/

exports.handleWebhook = async (req, res) => {

try {

if (

!req.body &&

!req.body.payload &&

!req.body.payload.event

) return res.status(200).end();

console.log(

`Received ${req.body.payload.event} for workflow ${req.body.payload.workflowID}`,

);

const webhookHandler = new WebhookHandler(req.body);

// Used to trigger the appropriate webhookHandler method based on

// the incoming event type from the webhook.

switch (req.body.payload.event) {

case 'workflow_launched':

await webhookHandler.workflowLaunched();

break;

case 'workflow_approval_status_changed':

await webhookHandler.workflowApprovalStatusChange();

break;

case 'workflow_completed':

await webhookHandler.workflowCompleted();

break;

case 'workflow_cancelled':

await webhookHandler.workflowCancelled();

break;

case 'workflow_updated':

await webhookHandler.workflowUpdated();

break;

default:

break;

}

console.log('finished');

res.status(200).end();

} catch (error) {

console.log(error);

console.log('error handling webhook', JSON.stringify(error));

res.status(500).end();

}

};Deploying the Google Cloud Function

The code above can be deployed as a Google Cloud Function using the Google Cloud command-line interface (CLI), ideally as a script in your package.json, e.g.:

gcloud functions deploy MY_CLOUD_FUNCTION_NAME --entry-point handleWebhook --runtime nodejs16 --trigger-http --allow-unauthenticated

You can also review the different methods of deploying Google Cloud Functions by reviewing the documentation provided by Google.

Ironclad Set Up

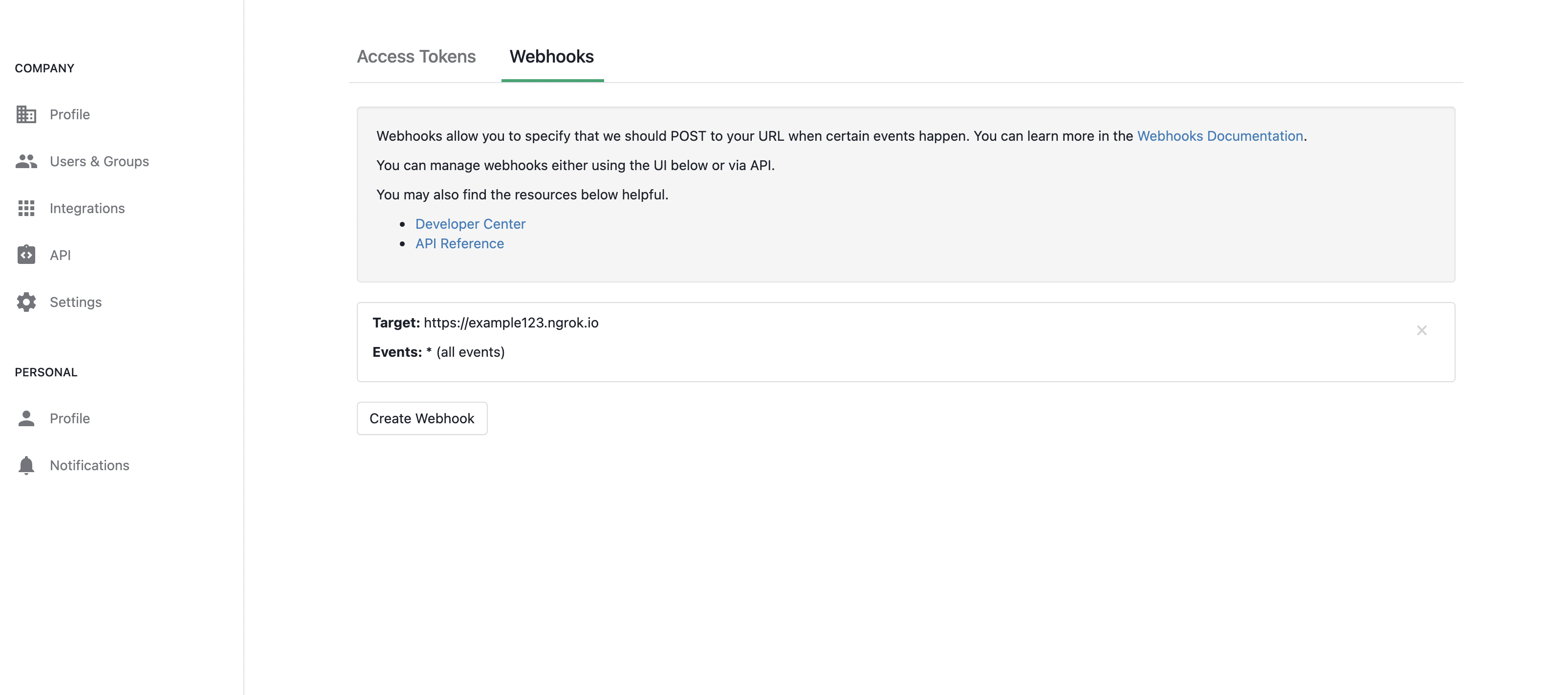

Now that we've set up the BigQuery data model and the Node.js code that will receive events from Ironclad to populate this data model, we can look at how to configure the webhook callbacks in Ironclad, so that workflow events will be sent to our Node.js code.

Webhooks

The webhook handler (handleWebhook) that we described in the previous section is written to accept any event type and route it to the appropriate sub-handler in the Node.js code. That simplifies our Ironclad configuration, since we only have to setup one webhook endpoint to handle all events.

Webhook configurations can be setup to handle all workflow event types, or a specific subset. In this case, our handler is only interested in the following event types:

- Workflow Launched

- Workflow Completed

- Workflow Approval Status Changed

- Workflow Updated

- Workflow Cancelled

We could configure the Ironclad webhook to only send these events, but since our code is written to safely ignore any events it is not going to process, we can keep it simple and set up the webhook to receive all events:

Example Webhook Configuration\

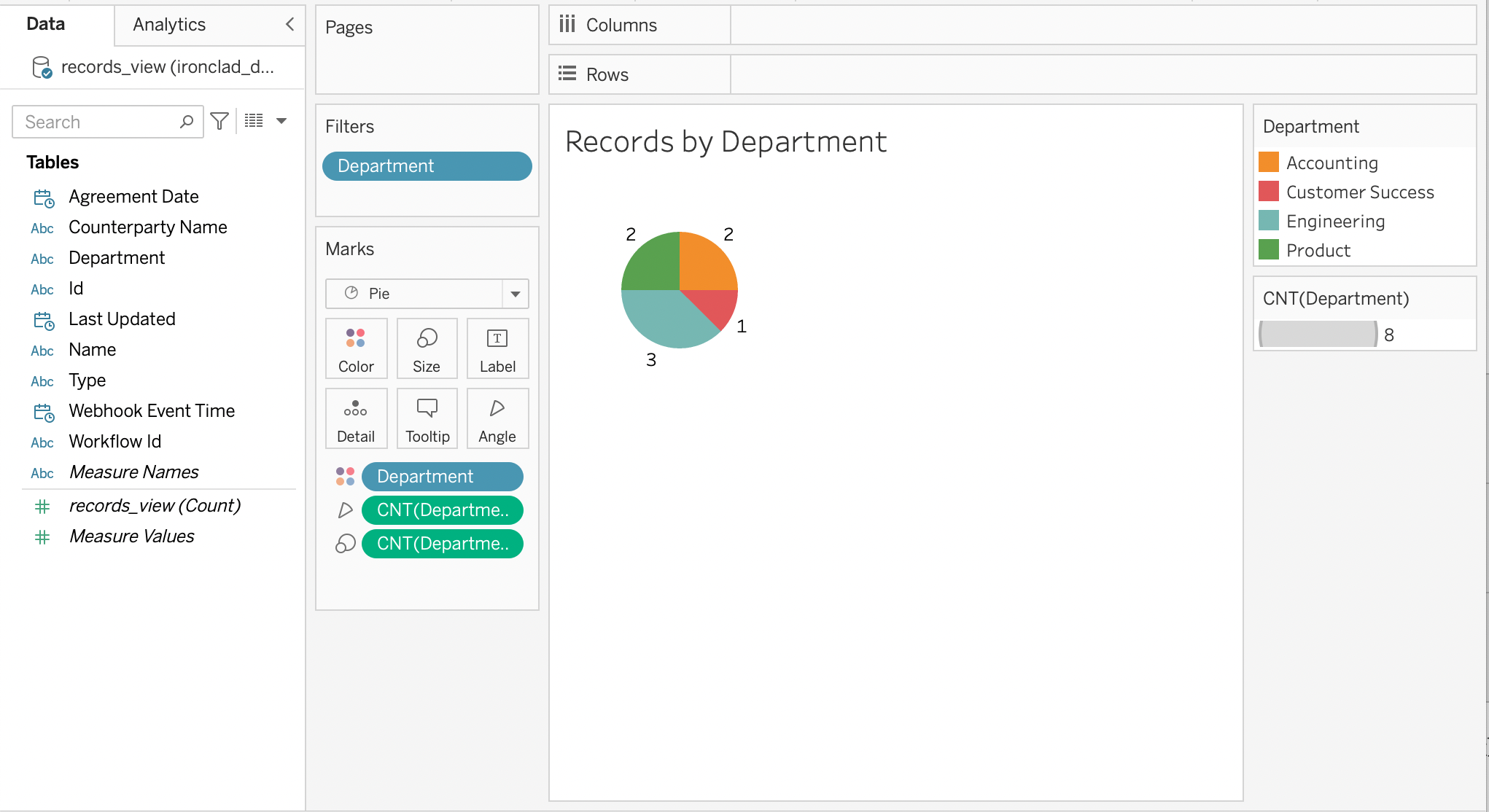

Visualization with Tableau

BigQuery to Tableau

In our example, we use the BigQuery connector provided by Tableau to retrieve data for our visualization. Tableau offers multiple methods and recommendations on connecting, which can be viewed in their documentation.

Examples

Below, you’ll find a few example visualizations using the data and schema we have covered in this guide.

Records by “Department”

The below worksheet shows using the created records view to visualize a breakdown of the number of records by a department field. The department field exists as fields on test workflows used for this guide and was extracted in the records_view view we created earlier in “BigQuery Set Up”.

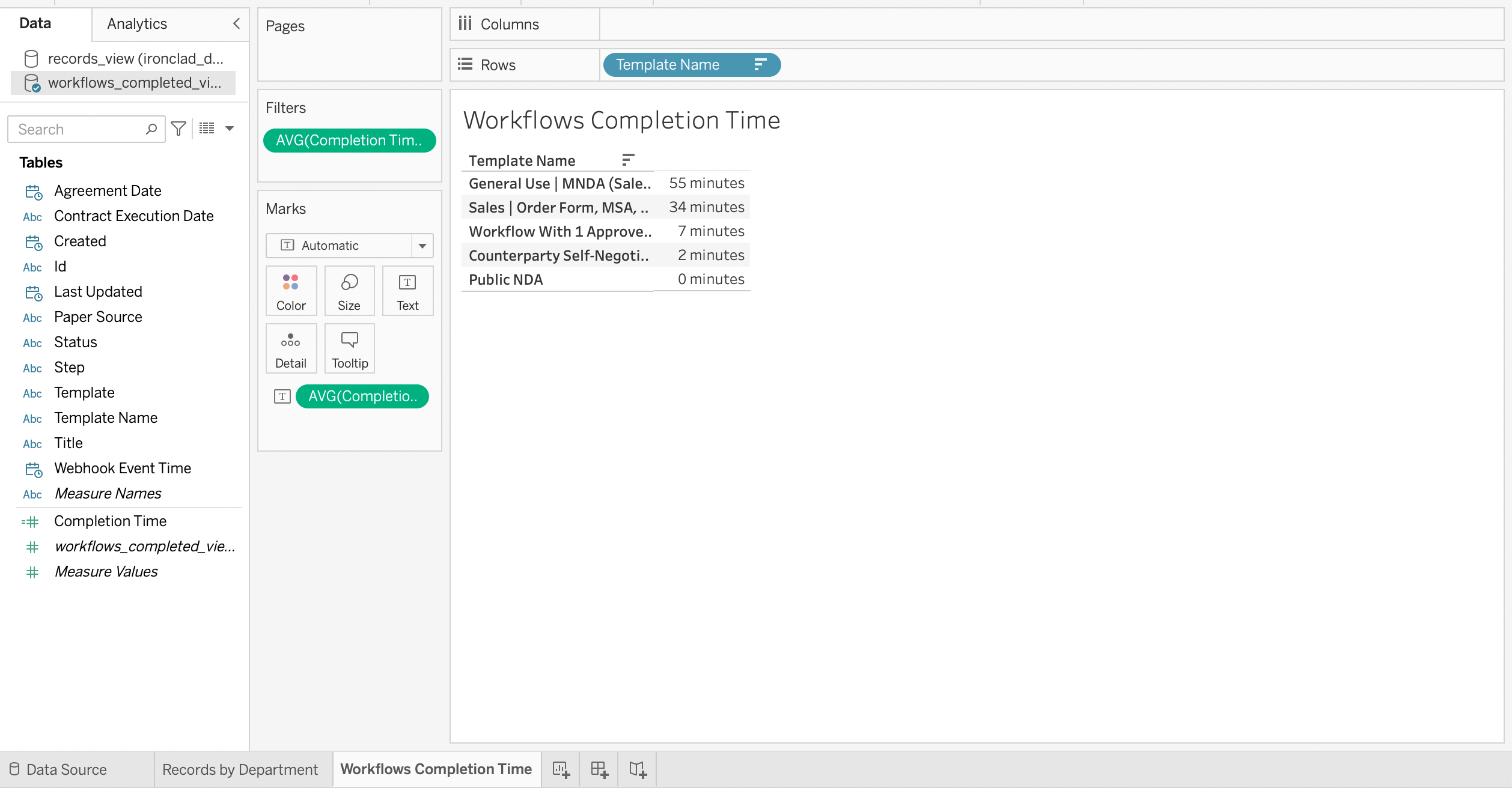

Workflows Completion Time

As long as a signer exists on a workflow, we are able to calculate the timing of workflow completion using the contractExecutionDate and created fields from the workflows_completed_view view in BigQuery.

We use a calculated field (shown below) in our example to calculate the number of minutes it takes for a workflow be completed.

DATEDIFF('minute', [Created], DATETIME([Contract Execution Date]))

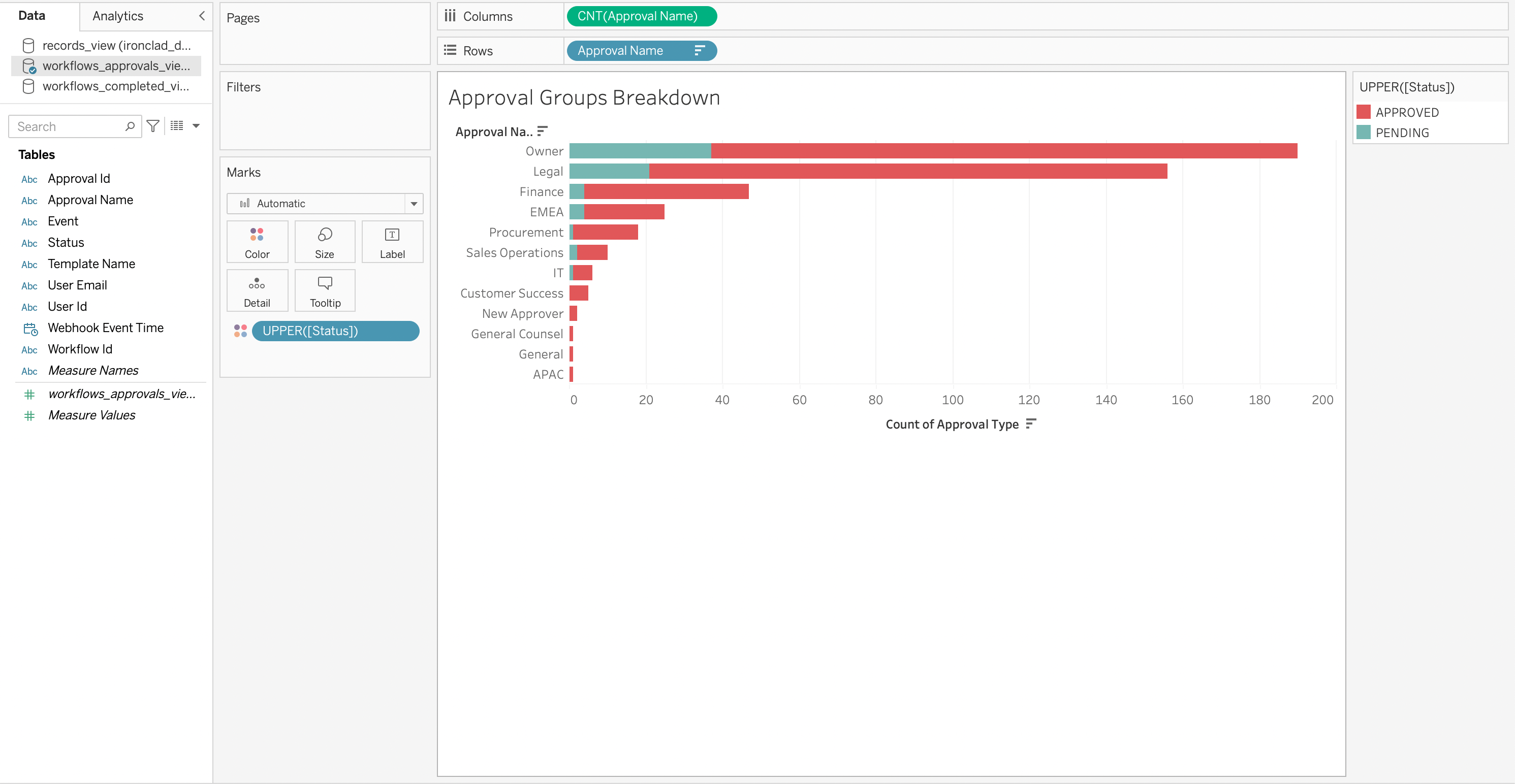

Approval Groups Breakdown

The visualization below shows a breakdown of the number of approval group status changes (with whether they were pending or approved) by approval group used in launched workflows. The data that powers this visualization is with the approval status changes we capture and push to the workflows_approvals view.

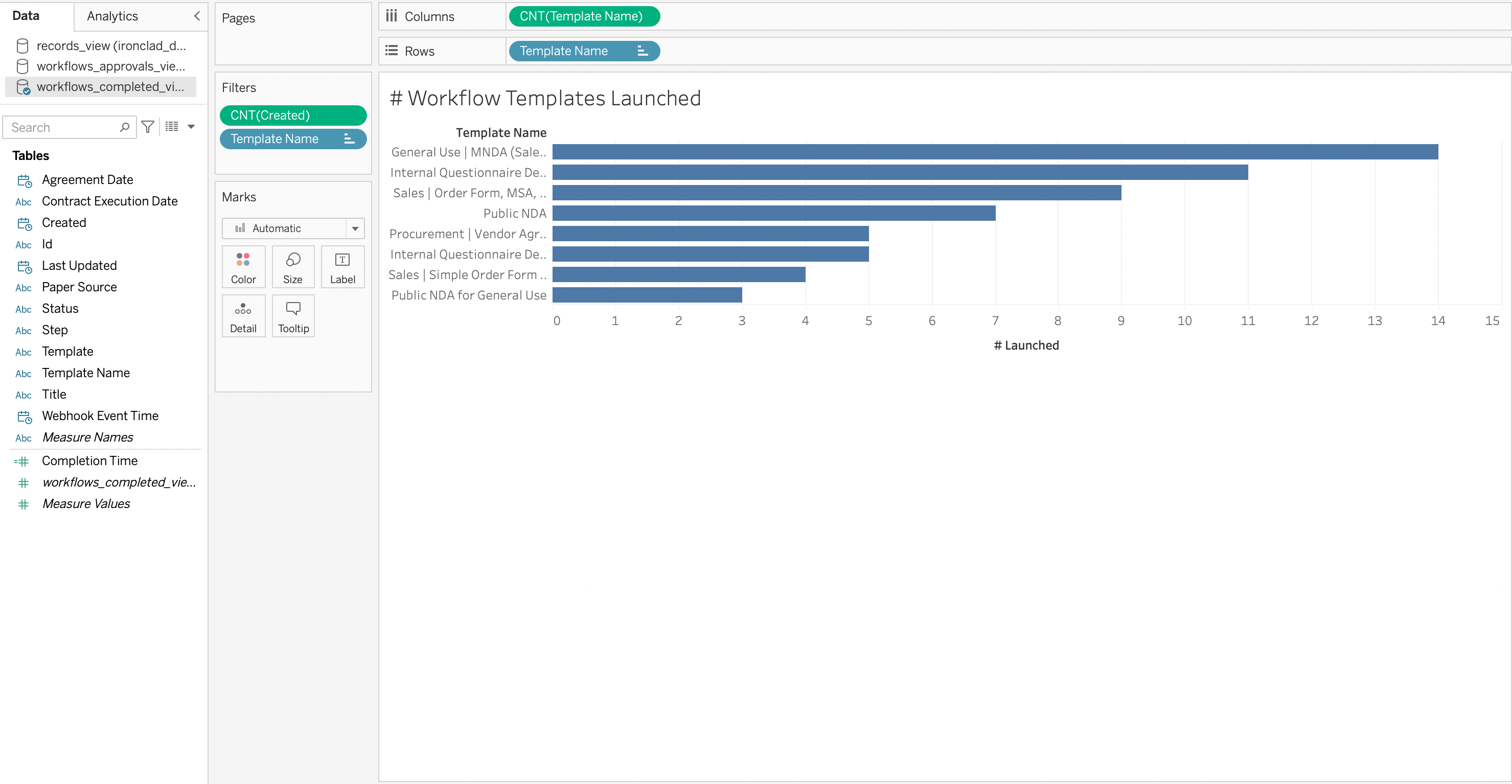

Number of Workflows Completed by Template

The visualization below shows the top templates used in completed workflows. This is using data the in the workflows_completed_view view.

Updated 5 months ago